Resource: TechTarget

Our Analytics Team needs to adapt quickly when project requirements change, which is why they rely on the Google Cloud Platform (GCP) services. The GCP lets our Analytics Team deliver many of its projects to the business seamlessly and is a key part of their project plans. Google recently hosted a Cloud OnBoard session in Charlotte, and some of our analytics and IT associates were able to participate. This full-day learning event gave attendees an overview of the GCP and best practices for getting the most out of the services offered.

For our analytics and IT teams, the GCP is a valuable resource. The platform lets developers pick the service that best fits a project’s requirements, which saves our team the time it would otherwise spend building a service from scratch. Another key advantage of using a cloud platform is not having to maintain the physical infrastructure behind a specific service.

One of the services highlighted in the GCP training was BigQuery. This is Google’s fully managed and serverless data warehouse service, which is a building block for many of our analytics projects.

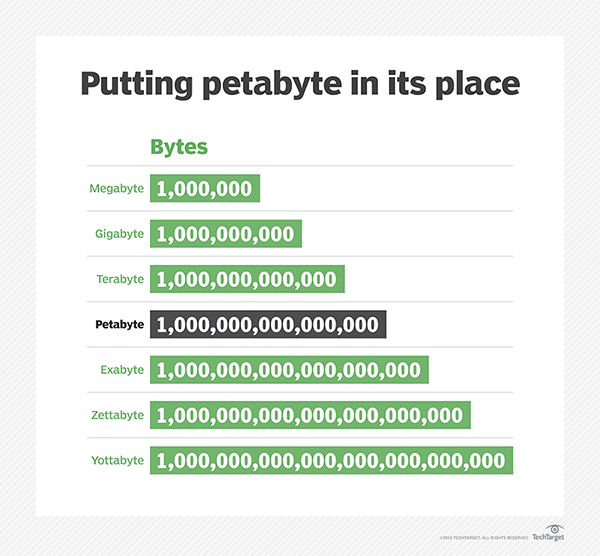

BigQuery offers several advantages over a traditional data warehouse. First, it doesn’t require the maintenance and administration of any physical hardware. Second, storage and computation are decoupled from each other, so each can be scaled independently and on demand. BigQuery can also scan data very quickly: terabytes in seconds and petabytes (1 petabyte is roughly 1M gigabytes) in minutes. Scanning data at this speed would be very expensive with a traditional data warehouse, or may not even be possible, depending on the IT infrastructure of the business. The on-demand model also provides greater flexibility and cost control: our team can scan data when it’s needed, rather than keeping an expensive cluster running constantly.

Another great advantage of BigQuery is the different ways a user can interact with the service, such as running SQL through the BigQuery web UI or the bq command-line tool, or calling it from code through the client libraries. These options let data analysts who are more familiar with traditional SQL, as well as those who prefer to use Python or other programming languages, integrate BigQuery data processing into their projects.
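For readers who prefer Python, the sketch below shows roughly what running a query from code can look like. It is a minimal illustration, not our team’s production code: it assumes the `google-cloud-bigquery` client library is installed and that credentials and a default project are already configured. The table is one of Google’s public sample datasets, chosen here only for illustration, and `run_query` is a hypothetical helper name.

```python
# Minimal sketch: run a SQL query from Python with the google-cloud-bigquery
# client library (pip install google-cloud-bigquery). Assumes credentials and
# a default project are already configured in the environment.

# Public sample table, used here only for illustration; swap in your own table.
# Note that the query names the columns it needs instead of using SELECT *.
QUERY = """
    SELECT name, SUM(number) AS total_people
    FROM `bigquery-public-data.usa_names.usa_1910_2013`
    WHERE state = 'TX'
    GROUP BY name
    ORDER BY total_people DESC
    LIMIT 10
"""


def run_query(sql: str = QUERY) -> list:
    """Run `sql` in BigQuery and return the result rows as plain dicts."""
    # Imported inside the function so this file still loads on machines
    # where the client library is not installed.
    from google.cloud import bigquery

    client = bigquery.Client()          # picks up the default project and credentials
    rows = client.query(sql).result()   # blocks until the query job finishes
    return [dict(row.items()) for row in rows]
```

Calling `run_query()` returns a list of dictionaries, one per result row, which can then be passed to whatever analysis code the project already uses. The same SQL text could be pasted into the web UI or passed to the bq command-line tool.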

During the training session, a key area of discussion was getting new users comfortable with the GCP and the services it can provide. New users risk running into unexpected charges if they misunderstand the pricing rules that Google Cloud follows. For BigQuery, for example, the instructor emphasized the importance of understanding the pricing structure described on the pricing page and of using the BigQuery best practices guide to help drive down costs.

Often a simple action that feels harmless can be very expensive if a user is not aware of the costs associated with it. For example, selecting many, or even all, of the columns from a table is a common practice in a traditional data warehouse environment. In BigQuery, however, this should be avoided: select only the columns you need when performing a query, because the cost of the operation depends on the amount of data scanned. You can also preview a query before running it, which shows how much data it will scan and lets you estimate the cost.
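To make the column-selection point concrete, here is a rough back-of-the-envelope sketch. Everything in it is an assumption made for illustration: the table, its column sizes, and the price per TiB are placeholders, so check the pricing page for current rates. The `dry_run_bytes` helper shows one way to ask BigQuery for the bytes a query would process without running it, assuming the `google-cloud-bigquery` client library and configured credentials.

```python
# Rough cost arithmetic for on-demand queries, which are billed by bytes scanned.
# PRICE_PER_TIB_USD is a placeholder assumption, not a quoted price.
PRICE_PER_TIB_USD = 5.00
GIB = 2 ** 30
TIB = 2 ** 40

# Hypothetical table: stored size of each column in bytes (made-up numbers).
COLUMN_BYTES = {
    "trip_id": 40 * GIB,
    "vehicle_id": 40 * GIB,
    "start_time": 40 * GIB,
    "route_geometry": 1928 * GIB,
}


def scanned_bytes(columns=None):
    """Bytes read by a full-table query; only referenced columns count.

    Passing None models SELECT *, which reads every column.
    """
    names = COLUMN_BYTES if columns is None else columns
    return sum(COLUMN_BYTES[name] for name in names)


def estimate_cost_usd(num_bytes, price_per_tib=PRICE_PER_TIB_USD):
    """Convert bytes scanned into an approximate on-demand charge."""
    return num_bytes / TIB * price_per_tib


def dry_run_bytes(sql):
    """Ask BigQuery how many bytes `sql` would process, without running it."""
    # Lazy import: requires google-cloud-bigquery and configured credentials.
    from google.cloud import bigquery

    client = bigquery.Client()
    config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    return client.query(sql, job_config=config).total_bytes_processed
```

With these made-up numbers, `estimate_cost_usd(scanned_bytes())` models `SELECT *` over 2 TiB, while `estimate_cost_usd(scanned_bytes(["trip_id", "start_time"]))` models a query that reads only two small columns, about 4% of the table. On a real table, `estimate_cost_usd(dry_run_bytes(sql))` gives the same kind of estimate from BigQuery’s own byte count.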

If you’re just starting to explore the cloud, we recommend attending a Cloud OnBoard session. You can search for upcoming sessions here. If you’re unable to attend an in-person training or want to explore on your own, Qwiklabs can be extremely helpful. This platform offers a series of tutorials, each exploring a different GCP service inside a temporary project. This allows users to learn how to use many of the services the platform offers while keeping costs to a minimum.

We look forward to sharing more in future blog posts about how our IT and Analytics Teams leverage the Google Cloud Platform and the various services it has to offer to help keep our customers connected to the road ahead.